The Role of Last-Level Cache Implementation for SoC Developers

May 12, 2020 by Kurt Shuler, Arteris

SoC developers are under pressure to meet the memory demands of their designs. This article looks at how a fourth, or last-level, cache can provide a solution.

One of the weird paradoxes of our time is that microprocessors are faster than memories. It’s strange because microprocessors are complex, billion-transistor circuits while DRAMs are just… rows and rows of identical memory cells. Processors are expensive, but DRAMs are practically commodities sold by the pound.

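One of our jobs as product designers is to work around that problem, mainly by using caches. We wrap the processor in first-, second-, and sometimes third-level caches to buffer the latency and bandwidth mismatch between fast processors and relatively slow DRAMs. That approach has worked for decades, and it still works.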

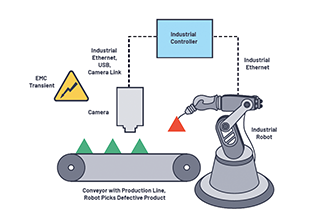

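Figure 1: A last-level cache (also called a system cache) reduces the number of accesses to off-chip memory, decreasing system latency and power consumption while increasing achievable bandwidth. It is usually located physically just in front of the memory controller for off-chip DRAM or flash memory.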

Machine learning (ML) is making this design more challenging. ML inference requires lots and lots of data, the same way that graphics convolutions or digital signal processing (DSP) tear through big, memory-resident data structures. New SoC designs for ML don’t just need fast processors – they need fast access to memory, too. Otherwise, all that CPU muscle goes to waste.

Options for Solving the Memory Challenge

Memory manufacturers have started developing all sorts of new DRAMs to bridge the gap. We’ve got high-bandwidth memory (HBM) with a theoretical 1 Gbit/s per pin, and even HBM2 and HBM2E, which promise up to roughly 3 Gbit/s per pin. And there are even more workaround options, such as stacked 3D SRAMs that use wireless inductive coupling to achieve triple the HBM2E bandwidth. But reaching that kind of speed requires tricky and expensive manufacturing techniques: multichip modules, vertically stacked silicon dies, silicon interposers, through-silicon vias (TSVs), 256-bit and 1024-bit buses, and/or silicon micro-bumps.
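Headline HBM figures are per-pin data rates; to turn one into a per-stack number, multiply by the interface width. The sketch below assumes a 1024-bit interface per stack (one of the bus widths listed above) and uses the approximate per-pin rates from the text. It computes theoretical peaks only, not sustained throughput, and the function name is purely illustrative.

```python
# Peak bandwidth of one memory interface = per-pin data rate x interface width.
# Assumption: a 1024-bit interface per HBM-family stack; per-pin rates are the
# approximate figures quoted in the article, not datasheet values.

def peak_bandwidth_gbytes(per_pin_gbits: float, bus_width_bits: int) -> float:
    """Return theoretical peak bandwidth in GB/s (decimal gigabytes)."""
    return per_pin_gbits * bus_width_bits / 8  # divide by 8 to convert bits to bytes


HBM_BUS_WIDTH_BITS = 1024  # assumed interface width per stack

for name, per_pin in [("HBM", 1.0), ("HBM2E", 3.0)]:
    peak = peak_bandwidth_gbytes(per_pin, HBM_BUS_WIDTH_BITS)
    print(f"{name}: {peak:.0f} GB/s per stack")
```

The wide bus is what turns a modest per-pin rate into a large per-stack number, and it is also why HBM needs interposers and micro-bumps: a 1024-bit interface is impractical to route on an ordinary package.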

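What about graphics memories like GDDR6? They’re cheap and plentiful. Surely they can do the job for ML workloads too? Not so fast. As the name implies, GDDR6 interfaces are intended for graphics cards, and they’re already in their sixth generation. The good news is that graphics chips and cards are a high-volume market. The bad news is that it’s a short-lived market. Although it’s tempting to piggyback on components and technology from the mainstream PC market, that’s often a recipe for disappointment. When the mainstream moves on, you’ll be left searching for obsolete components from a limited number of suppliers. SoC designers need components and interfaces that will last for the life of the design, never mind the life of the product in the field.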

Adding a Fourth (Last-Level) Cache

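So what’s the best memory solution? For a hint, we can look at what other companies are doing. Teardown analyses show that Apple, for one, addresses the speed mismatch by adding another cache. If a big company with practically unlimited R&D resources is designing around this bottleneck in its SoCs, the approach is probably worth a look.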

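The trick is not to put the cache near the processor. It’s counterintuitive, but it works. Most high-end embedded processors, such as the Arm Cortex-A series, have L1 and L2 caches for each CPU core. Sometimes the processor complex also has an L3 cache shared among all the CPU cores. That’s all fine; none of it needs to change.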

Now, add a fourth cache, the last-level cache, on the global system bus, near the peripherals and the DRAM controller, rather than inside the CPU complex. The last-level cache acts as a buffer between the high-speed Arm core(s) and the large but relatively slow main memory.

This configuration works because the DRAM controller never “sees” the new cache. It just handles memory read/write requests as normal. The same goes for the Arm processors. They operate normally. No extra cache coherence hardware or software is required. Like all good caches, this last-level cache is transparent to software.
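Here is a minimal Python model of that transparency, under simplifying assumptions: a direct-mapped, write-back cache with one word per line, and every memory request routed through it (so there is no second copy of the data to keep coherent). The names `DRAM`, `LastLevelCache`, and `workload` are hypothetical, not any vendor’s API. The same workload runs unchanged against bare DRAM or against the cache; only the number of off-chip transactions differs.

```python
class DRAM:
    """Hypothetical stand-in for off-chip memory behind a DRAM controller."""

    def __init__(self):
        self.cells = {}
        self.accesses = 0  # counts off-chip transactions

    def read(self, addr: int) -> int:
        self.accesses += 1
        return self.cells.get(addr, 0)

    def write(self, addr: int, value: int) -> None:
        self.accesses += 1
        self.cells[addr] = value


class LastLevelCache:
    """Hypothetical direct-mapped, write-back cache with one word per line.

    It exposes the same read/write interface as DRAM, so neither the
    requester nor the DRAM model needs to know it is there.
    """

    def __init__(self, backing: DRAM, num_lines: int = 4):
        self.backing = backing
        self.num_lines = num_lines
        self.lines = {}  # index -> [tag, value, dirty]

    def _line_for(self, addr: int, fetch: bool) -> list:
        index, tag = addr % self.num_lines, addr // self.num_lines
        line = self.lines.get(index)
        if line is not None and line[0] == tag:
            return line  # hit: no off-chip traffic
        if line is not None and line[2]:
            # Evict a dirty victim by writing it back to DRAM.
            self.backing.write(line[0] * self.num_lines + index, line[1])
        value = self.backing.read(addr) if fetch else 0
        line = [tag, value, False]
        self.lines[index] = line
        return line

    def read(self, addr: int) -> int:
        return self._line_for(addr, fetch=True)[1]

    def write(self, addr: int, value: int) -> None:
        # One word per line: a write overwrites the whole line, so no fetch.
        line = self._line_for(addr, fetch=False)
        line[1], line[2] = value, True

    def flush(self) -> None:
        """Write back all dirty lines so DRAM holds the latest data."""
        for index, line in self.lines.items():
            if line[2]:
                self.backing.write(line[0] * self.num_lines + index, line[1])
                line[2] = False


def workload(memory) -> int:
    """Identical code runs against DRAM directly or through the cache."""
    for addr in range(3):
        memory.write(addr, addr * 10)
    total = 0
    for _ in range(100):
        for addr in range(3):
            total += memory.read(addr)
    return total


direct = DRAM()
cached_dram = DRAM()
llc = LastLevelCache(cached_dram)

result_direct = workload(direct)
result_cached = workload(llc)
llc.flush()
print(result_direct == result_cached, direct.accesses, cached_dram.accesses)  # True 303 3
```

In this toy run the direct path makes 303 DRAM accesses (3 writes plus 300 reads), while the cached path makes only the 3 write-backs issued by `flush()`, and both end with identical DRAM contents. Real savings depend entirely on the workload’s locality and the cache size.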

Pros and Cons of a Last-Level Cache

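Like all good caches, a last-level cache can improve performance significantly without resorting to exotic, expensive, or ill-defined memory technologies. It makes what you already have work better. Specifically, it improves latency because the cache is on-chip and far faster than off-chip DRAM, and it improves bandwidth because its connection to the CPU cluster is wider and faster. It’s a win-win.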
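The latency argument can be made concrete with the textbook average-memory-access-time formula, AMAT = hit time + miss rate × miss penalty, applied to requests that have already missed the CPU’s own caches. All latencies and rates below are hypothetical placeholders chosen to keep the arithmetic easy, not measurements of any real SoC, and the model assumes an LLC miss pays the LLC lookup time plus the full DRAM latency.

```python
# Illustrative only: every latency and rate below is a made-up placeholder.

def amat(hit_time_ns: float, miss_rate: float, miss_penalty_ns: float) -> float:
    """Average memory access time = hit time + miss rate * miss penalty."""
    return hit_time_ns + miss_rate * miss_penalty_ns


DRAM_NS = 100.0      # hypothetical off-chip DRAM latency
LLC_HIT_NS = 20.0    # hypothetical on-chip last-level cache hit latency
LLC_MISS_RATE = 0.4  # hypothetical fraction of L3 misses that also miss the LLC

without_llc = DRAM_NS                                # every L3 miss goes off-chip
with_llc = amat(LLC_HIT_NS, LLC_MISS_RATE, DRAM_NS)  # 20 + 0.4 * 100

print(f"Average L3-miss latency: {without_llc:.0f} ns -> {with_llc:.0f} ns")
```

The same placeholder miss rate also means only 40% of those requests reach the DRAM controller, which leaves more off-chip bandwidth for the requests that do have to go out.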

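What are the downsides? Of course, the cache takes up die area. The cache control logic (tag management, lookup tables, and so on) is negligible, but the cache RAM itself uses measurable space. On the other hand, a last-level cache saves power, because nothing burns more energy than reading from or writing to external DRAM, especially when you’re hammering it the way modern workloads do. Every transaction kept on-chip saves a significant amount of power and time.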
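A back-of-the-envelope energy model shows why keeping transactions on-chip matters. The per-access energies and the hit rate below are hypothetical placeholders (real figures depend on the process node, DRAM type, and PHY); the point is the structure of the calculation: every request pays the on-chip lookup, and only misses pay the much larger off-chip cost. The function names are illustrative.

```python
# Placeholder energies: real values depend on process node, DRAM type, and PHY.
DRAM_PJ_PER_ACCESS = 1000.0  # hypothetical energy per off-chip DRAM access
LLC_PJ_PER_ACCESS = 100.0    # hypothetical energy per on-chip LLC access


def energy_without_llc_uj(requests: int) -> float:
    """Every request goes off-chip. Returns microjoules."""
    return requests * DRAM_PJ_PER_ACCESS / 1e6  # pJ -> uJ


def energy_with_llc_uj(requests: int, llc_hit_rate: float) -> float:
    """Every request looks up the LLC; only misses go off-chip.

    Simplification: ignores cache leakage power and write-back traffic.
    """
    misses = requests * (1.0 - llc_hit_rate)
    return (requests * LLC_PJ_PER_ACCESS + misses * DRAM_PJ_PER_ACCESS) / 1e6


REQUESTS = 1_000_000
HIT_RATE = 0.6  # hypothetical

print(f"{energy_without_llc_uj(REQUESTS):.0f} uJ without LLC, "
      f"{energy_with_llc_uj(REQUESTS, HIT_RATE):.0f} uJ with LLC")
```

With a low hit rate the extra lookup energy can eat into the savings, so whether the trade pays off depends on the workload and on the real per-access costs of the target process.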

There’s also a security benefit. On-chip cache transactions can’t be eavesdropped on by probing the external memory bus, nor subjected to side-channel attacks via signal probing or RF manipulation. Caches are tested, well-understood technology. That doesn’t mean they’re simple or trivial to implement (there’s a real art to designing a good cache), but at least you know you’re not beta-testing some vendor’s proprietary bleeding-edge memory interface.

To dive deeper into last-level cache implementation, you can read CodaCache: Helping to Break the Memory Wall, a technical paper that describes last-level cache implementation for SoC developers. CodaCache is designed as drop-in IP that can be included in an SoC design without resorting to weird, expensive, or evolving technologies.

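Industry Articles are a form of content that allows industry partners to share useful news, messages, and technology with All About Circuits readers in a way editorial content is not well suited to. All Industry Articles are subject to strict editorial guidelines with the intention of offering readers useful news, technical expertise, or stories. The viewpoints and opinions expressed in Industry Articles are those of the partner and not necessarily those of All About Circuits or its writers.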